Beyond the 1GB limitation of varlena

This article is a part of the PostgreSQL Advent Calendar 2016.

At the request of Joe Conway (@josepheconway), I wrote this article in English.

I'd like to share the discussion we had at the PostgreSQL developer unconference held the day before PGconf.ASIA 2016, along with a related idea of mine.

PostgreSQL supports variable-length data types such as text, bytea, json, numeric, arrays and so on.

Each of these data types has its own characteristics and internal format, but all of them are built on a common structure for representing variable-length fields, called varlena.

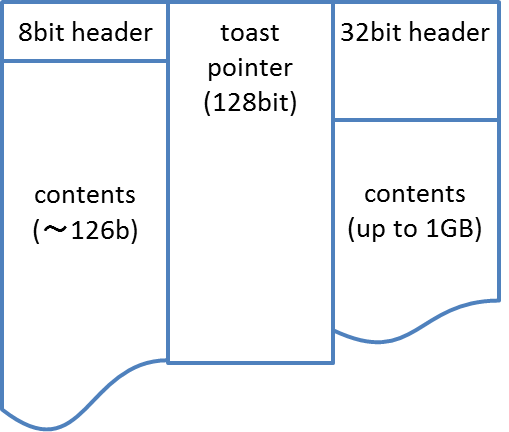

It has a very simple internal format. The contents of a variable-length field are preceded by either a 1-byte or a 4-byte header.

We can identify the header type from the least significant bit of the first byte. If that bit is set, the header is 1 byte long; otherwise, it is 4 bytes long.

With a 4-byte header, the second bit indicates whether the data is compressed. The remaining 30 bits hold the length, so the maximum length of a variable-length field is 1GB.

A special case exists when the first byte is 00000001. This marks an external TOAST reference, which consists of the OID of the TOAST table and a unique ID within that table.

Below is the source code comment in include/postgres.h.

     * Bit layouts for varlena headers on little-endian machines:
     *
     * xxxxxx00 4-byte length word, aligned, uncompressed data (up to 1G)
     * xxxxxx10 4-byte length word, aligned, *compressed* data (up to 1G)
     * 00000001 1-byte length word, unaligned, TOAST pointer
     * xxxxxxx1 1-byte length word, unaligned, uncompressed data (up to 126b)
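The header rules described above can be sketched in a few lines of C. This is only an illustration of the little-endian bit layout from the comment, not PostgreSQL's own code: the names `header_kind`, `classify_header`, `length_4b` and `length_1b` are hypothetical, and real code should use the macros from postgres.h such as VARSIZE() and VARDATA().

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical names for the four header patterns (little-endian layout). */
typedef enum {
    HDR_4B_PLAIN,       /* xxxxxx00: 4-byte header, uncompressed */
    HDR_4B_COMPRESSED,  /* xxxxxx10: 4-byte header, compressed   */
    HDR_1B_TOAST_PTR,   /* 00000001: external TOAST reference    */
    HDR_1B_SHORT        /* xxxxxxx1: 1-byte header, short datum  */
} header_kind;

/* Classify a datum by looking only at its first byte. */
header_kind classify_header(uint8_t first_byte)
{
    if (first_byte == 0x01)
        return HDR_1B_TOAST_PTR;
    if (first_byte & 0x01)
        return HDR_1B_SHORT;
    if (first_byte & 0x02)
        return HDR_4B_COMPRESSED;
    return HDR_4B_PLAIN;
}

/*
 * Length stored in a 4-byte header word (read as a little-endian integer).
 * Two bits are flags, so only 30 bits remain: the largest value is
 * 0x3FFFFFFF, which is where the 1GB limit comes from.
 */
uint32_t length_4b(uint32_t header_word)
{
    assert((header_word & 0x01) == 0);   /* must be a 4-byte header */
    return (header_word >> 2) & 0x3FFFFFFF;
}

/* Length stored in a 1-byte header: 7 bits, counting the header byte itself. */
uint32_t length_1b(uint8_t first_byte)
{
    assert((first_byte & 0x01) != 0 && first_byte != 0x01);
    return (uint32_t) (first_byte >> 1) & 0x7F;
}
```

Because both lengths count the header itself, a 1-byte header can describe at most 127 bytes in total, which leaves the 126 bytes of data mentioned in the comment.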

My concern is that we have no way to represent a variable-length datum larger than 1GB.

It is quite natural for users to want to process as much data as the system's capability allows. It is not uncommon for recent GPU models to have 10GB-class device memory or more.

On the other hand, because of this restriction on variable-length data in PostgreSQL, we cannot have a datum larger than 1GB. This also means we cannot pass a large matrix (= 2D-array) to a PL/CUDA function at once. That is a restriction we cannot ignore when the problem a user wants to solve with PL/CUDA is impossible or expensive to split into multiple portions.

Against this background, we discussed a few options for supporting variable-length data larger than 1GB.

64-bit varlena header

The first idea was straightforward but drew little interest, because 99% of the existing variable-length data types are satisfied with the 1GB limit.

If we add one more varlena header format, VARDATA() and VARSIZE(), which are widely used in the PostgreSQL core and in extensions, would need an extra branch inside the macro, and it is not easy to justify that penalty for such a niche use.

Use of large-object and its ID instead

The second idea was a suggestion from the audience. PostgreSQL already has a large object feature, which can store a data chunk of up to 4TB under a unique identifier. If a PL/CUDA function accepted the ID of a large object instead of a varlena datum, the PL handler could fetch the larger data chunk by itself.

However, there is a problematic scenario. This approach requires users to construct large objects beforehand, which is inconvenient when a user wants to work with a large matrix constructed on the fly. For example, the query below constructs matrices from scans of the underlying tables with qualifiers, and the qualifiers can change on each execution.

    SELECT * FROM matrix_unnest(
      (SELECT my_plcuda_function(Q.matrix, D.matrix)
         FROM (SELECT array_matrix(a,b,c) matrix
                 FROM table_q
                WHERE tag LIKE '%abc%') Q,
              (SELECT array_matrix(x,y,z) matrix
                 FROM table_d
                WHERE tag LIKE '%xyz%') D
      )
    )

In addition, creating a large object causes unnecessary I/O, which slows performance.

Special type that includes indirect reference

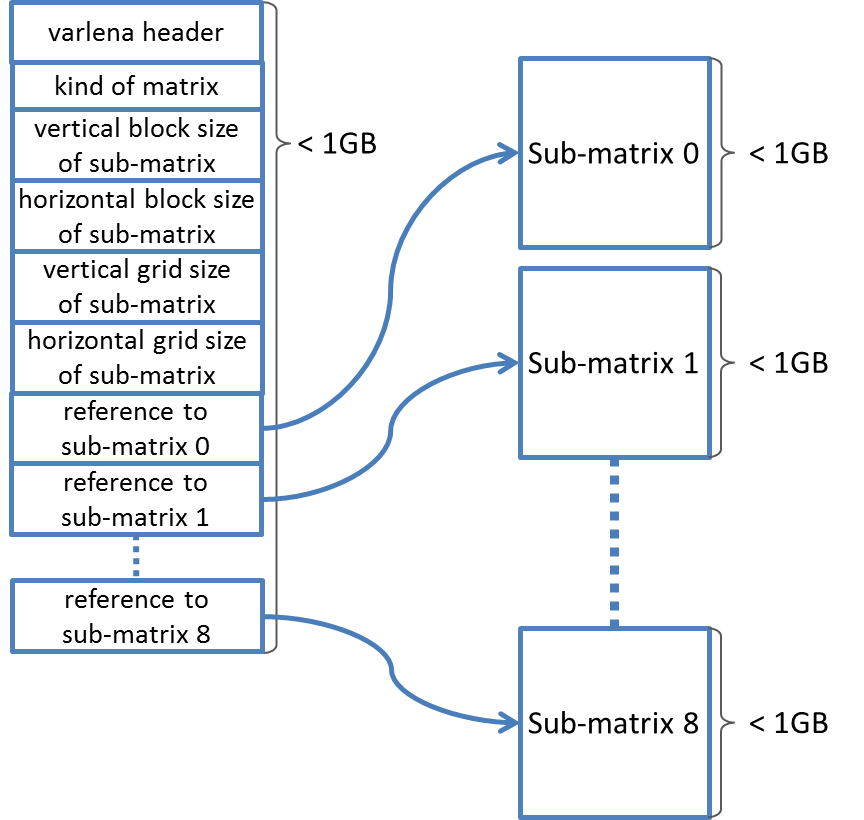

The overall consensus was to define a special data type that supports indirect references to data chunks smaller than 1GB. If only a narrow set of use cases needs a datum larger than 1GB, it is quite natural to define a special data type for the purpose.

My interest is in representing matrices in the database.

A matrix has little need to keep all of its items in one flat memory chunk.

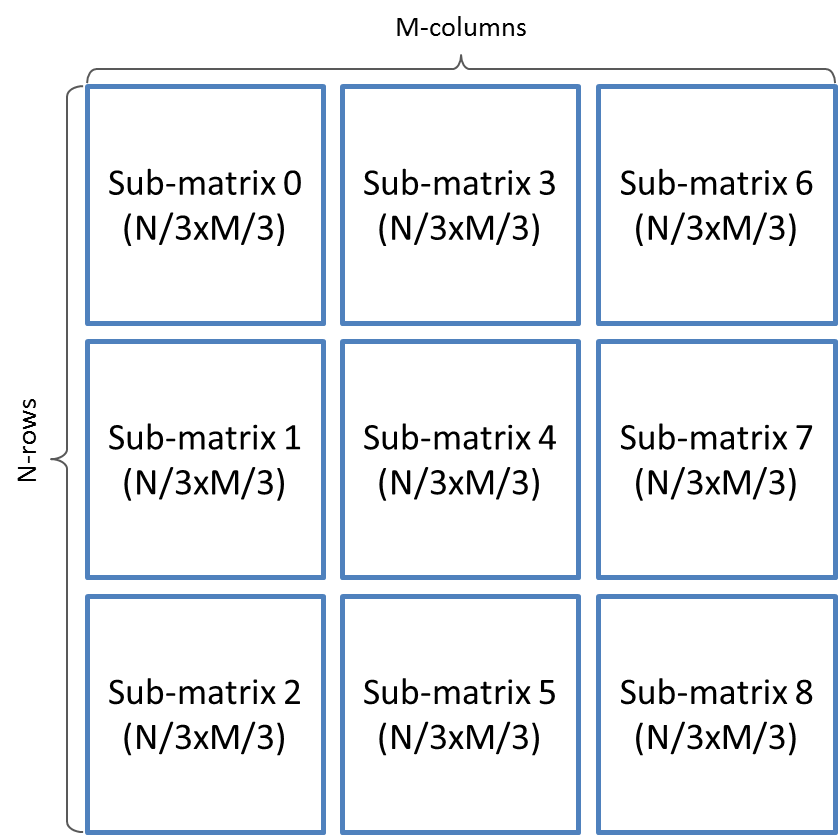

If we have an 8GB matrix, we can split it into 9 portions to keep each chunk smaller than 1GB.

Then, once we define a special matrix type that consists of small metadata and indirect references to chunks smaller than 1GB, we can represent a matrix larger than 1GB as an ordinary SQL data type.

A remaining problem is serialization/deserialization when a value of the matrix data type is saved or loaded.

Right now, PostgreSQL saves the contents of a variable-length datum to storage as is, so the pointers that reference the sub-matrices have to be serialized appropriately. However, we have no infrastructure for manipulating a type-specific data structure in toast_insert_or_update(), which sets up the physical tuple for INSERT/UPDATE.
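To make the idea concrete, here is a minimal sketch of what such a type could look like, together with the arithmetic behind the 9 portions. Everything here is an assumption for illustration: `big_matrix`, `chunks_needed` and `CHUNK_LIMIT` are hypothetical names, not an existing PostgreSQL structure, and the calculation ignores per-chunk header overhead.

```c
#include <assert.h>
#include <stdint.h>

/* Largest length a 30-bit length field can hold (just under 1GB). */
#define CHUNK_LIMIT ((uint64_t) 0x3FFFFFFF)

/*
 * Hypothetical layout: small metadata plus indirect references.
 * Each entry of chunks[] would point to an ordinary varlena below 1GB.
 */
typedef struct {
    uint32_t rows;       /* number of rows in the whole matrix    */
    uint32_t cols;       /* number of columns in the whole matrix */
    uint32_t elem_size;  /* size of one element in bytes          */
    uint32_t nchunks;    /* number of sub-matrix chunks           */
    void   **chunks;     /* indirect references to the chunks     */
} big_matrix;

/* Number of chunks needed so that no chunk exceeds chunk_limit bytes. */
uint64_t chunks_needed(uint64_t total_bytes, uint64_t chunk_limit)
{
    assert(chunk_limit > 0);
    return (total_bytes + chunk_limit - 1) / chunk_limit;   /* round up */
}
```

An 8GB matrix needs 9 chunks rather than 8 because a chunk of exactly 1GB does not fit in 30 bits: `chunks_needed(8ULL << 30, CHUNK_LIMIT)` evaluates to 9.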

Most likely, pg_type needs optional callback functions for serialization/deserialization. They would be mandatory for any data structure that holds indirect references to other memory chunks.

I expect the serialization callback to use the TOAST relation to save the large (but smaller than 1GB) chunks, and then store their unique IDs in place of the pointers. This approach has another advantage: only the sub-matrices that were actually modified need to be written again. If some sub-matrices were not updated, we don't need to delete their old TOAST pages and insert new ones. That is a performance benefit over the existing flat varlena structure.
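The following sketch shows the shape such a serialization callback might take. It is only an illustration under assumptions: no such callback exists in PostgreSQL today, and `chunk_ref`, `store_chunk_fn`, `serialize_chunks` and `counting_store` are hypothetical names. The `store` function pointer stands in for whatever would write a chunk to the TOAST relation and return its ID.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* One sub-matrix: in-memory pointer plus the ID it was last stored under. */
typedef struct {
    const void *data;       /* in-memory contents of the sub-matrix   */
    size_t      len;        /* length in bytes (below 1GB)            */
    uint32_t    stored_id;  /* ID from the last save, 0 = never saved */
    bool        dirty;      /* modified since the last save?          */
} chunk_ref;

/* Stand-in for "save this chunk and return its unique ID". */
typedef uint32_t (*store_chunk_fn)(const void *data, size_t len, void *ctx);

/*
 * Replace pointers with IDs. Only chunks that are new or modified are
 * stored again; untouched chunks keep their old ID. Returns the number
 * of chunks actually written.
 */
size_t serialize_chunks(chunk_ref *chunks, size_t nchunks,
                        uint32_t *out_ids, store_chunk_fn store, void *ctx)
{
    size_t written = 0;

    assert(chunks != NULL && out_ids != NULL && store != NULL);
    for (size_t i = 0; i < nchunks; i++) {
        if (chunks[i].dirty || chunks[i].stored_id == 0) {
            chunks[i].stored_id = store(chunks[i].data, chunks[i].len, ctx);
            chunks[i].dirty = false;
            written++;
        }
        out_ids[i] = chunks[i].stored_id;
    }
    return written;
}

/* Demo store: hands out consecutive IDs from a counter passed via ctx. */
uint32_t counting_store(const void *data, size_t len, void *ctx)
{
    uint32_t *next_id = ctx;

    (void) data;
    (void) len;
    return (*next_id)++;
}
```

With three chunks of which one is unchanged, a call writes only two of them, and a second call with no further modification writes none.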

The timing of deserialization needs a bit more consideration. heap_tuple_fetch_attr handles deserialization of the existing flat varlena, but no type OID is supplied to that function. Changing the function prototype is not a good option, because much existing code already calls this function without a type OID.

We have two other options here. The first packs the type OID into the serialized structure. It requires defining a new VARTAG_* label to distinguish the structure from the existing flat varlena. The second is delayed loading, because indirectly referenced data chunks are never used without functions/operators that support the data type. It avoids loading unreferenced chunks; however, it is uncertain whether functions/operators may manipulate a value supplied as an argument. *1
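The delayed-load option can be sketched as follows. This is a hypothetical illustration, not a proposal for the actual interface: `lazy_chunk`, `load_chunk_fn`, `lazy_chunk_get` and `counting_loader` are invented names, and the loader stands in for fetching a chunk from the TOAST relation by its ID.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* A chunk that is known only by its stored ID until someone needs it. */
typedef struct {
    uint32_t    stored_id;  /* ID written at serialization time */
    const void *data;       /* NULL until the first access      */
} lazy_chunk;

/* Stand-in for "fetch the chunk with this ID from storage". */
typedef const void *(*load_chunk_fn)(uint32_t stored_id, void *ctx);

/* Load the chunk on first access and remember the result. */
const void *lazy_chunk_get(lazy_chunk *chunk, load_chunk_fn load, void *ctx)
{
    assert(chunk != NULL && load != NULL);
    if (chunk->data == NULL)
        chunk->data = load(chunk->stored_id, ctx);
    return chunk->data;
}

/* Demo loader: counts how often it is called via an int passed in ctx. */
const void *counting_loader(uint32_t stored_id, void *ctx)
{
    static const char payload[] = "sub-matrix bytes";

    (void) stored_id;
    *(int *) ctx += 1;
    return payload;
}
```

Note that `lazy_chunk_get` writes the loaded pointer back into the value it was given. Whether a function or operator is allowed to do that to its argument is exactly the open question raised above; if it is not, each caller has to load the chunk again.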

Lastly, even if the data format allows a variable-length datum larger than 1GB, such a datum is never a small data chunk. It involves a considerable amount of I/O (or memory copying).

Therefore, it is important to have special optimizations based on knowledge of the typical use cases of the type.

For example, some workloads take a sparse matrix, which has a small number of non-zero values but is mostly zero. In this case, the type may be able to treat an empty sub-matrix as all zeros and so reduce the data size.
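A minimal sketch of that optimization, assuming a matrix stored as a row-major grid of equally sized sub-matrices of doubles: a missing sub-matrix is simply a NULL reference and reads as zero. The names `tiled_matrix` and `tiled_get` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical matrix split into a row-major grid of sub-matrices. */
typedef struct {
    uint32_t rows, cols;            /* dimensions of the whole matrix */
    uint32_t tile_rows, tile_cols;  /* dimensions of one sub-matrix   */
    const double **tiles;           /* NULL entry = all-zero tile     */
} tiled_matrix;

/*
 * Read one element. An absent sub-matrix costs no storage and reads as 0.
 * Assumes every stored tile is a full tile_rows x tile_cols block.
 */
double tiled_get(const tiled_matrix *m, uint32_t r, uint32_t c)
{
    assert(m != NULL && r < m->rows && c < m->cols);

    uint32_t tiles_per_row = (m->cols + m->tile_cols - 1) / m->tile_cols;
    size_t   tile_index = (size_t) (r / m->tile_rows) * tiles_per_row
                          + c / m->tile_cols;
    const double *tile = m->tiles[tile_index];

    if (tile == NULL)
        return 0.0;
    return tile[(size_t) (r % m->tile_rows) * m->tile_cols
                + c % m->tile_cols];
}
```

For a 4x4 matrix made of 2x2 tiles where only the top-left tile is stored, reads from the other three tiles return 0.0 without any chunk being allocated or loaded.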

Diagonal matrices are also often used, where valid values are located only around the diagonal axis.

I hope to make a proof-of-concept patch in the near future, and then have a discussion on pgsql-hackers.

*1: If that is not acceptable, we may need to load a sub-matrix multiple times when a particular matrix object is referenced by multiple functions/operators.