Ever since I saw a presentation by Tim Child in Ottawa last year, I have been checking whether one idea is feasible to implement.

Is it possible to accelerate sequential scans in PostgreSQL?

We often see a sequential scan chosen instead of an index scan for queries with complex calculations. I thought a GPU should work well in these cases.

Since I had some time for development during the Christmas vacation, I tried to implement a module that works as an FDW (foreign data wrapper) for PostgreSQL.

The module is called PG-Strom, pronounced "shutt-row-me" in the German style.

The name comes from "Streaming Multiprocessor", a processing unit inside a GPU.

Of course, it relies on the existing FDW interface, so updates are not available, and there are further restrictions around sorting and aggregate functions. However, it achieves good performance for a prototype.

Note that the following description is based on my own understanding (I am quite new to CUDA), so please point out anything that is incorrect.

Benchmark

Even though it is an arbitrary test case, I tried to execute a query that scans a table with 20 million records in my development environment, which has an NVidia GTS450eco installed.

    -- A regular table
    mytest=# SELECT count(*) FROM pgbench_accounts
               WHERE (xval - 23.45) * (xval - 23.45) + (yval - 54.32) * (yval - 54.32) < 100;
     count
    --------
     629291
    (1 row)

    Time: 29030.738 ms

    -- with PG-Strom
    mytest=# SELECT count(*) FROM pgstrom_accounts
               WHERE (xval - 23.45) * (xval - 23.45) + (yval - 54.32) * (yval - 54.32) < 100;
     count
    --------
     629291
    (1 row)

    Time: 2337.475 ms

It is a surprising result. PG-Strom returns the same result more than 10 times faster!

In addition, we can probably expect further improvement, because this GPU is quite a cheap one (about 100 euros).

Let's try again. I reduced the number of records (5 million records, with shared_buffers=960MB) so that the whole table fits in the buffer, to eliminate the effect of disk I/O.

    -- A regular table
    mytest=# SELECT count(*) FROM t1
               WHERE (xval - 23.45) * (xval - 23.45) + (yval - 54.32) * (yval - 54.32) < 100;
     count
    --------
     157800
    (1 row)

    Time: 4106.045 ms

    -- with PG-Strom
    mytest=# SELECT count(*) FROM t2
               WHERE (xval - 23.45) * (xval - 23.45) + (yval - 54.32) * (yval - 54.32) < 100;
     count
    --------
     157800
    (1 row)

    Time: 393.346 ms

Wow!
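To put a number on both runs, here is a small sketch that computes the speedup from the elapsed times shown in the psql sessions above. The dictionary labels and the `speedup` helper are my own names for illustration; only the four timings come from the measurements.

```python
# Elapsed times in milliseconds, copied from the psql sessions above:
# (regular table, PG-Strom foreign table)
timings_ms = {
    "20M rows, disk involved": (29030.738, 2337.475),
    "5M rows, all in buffer": (4106.045, 393.346),
}


def speedup(regular_ms, pgstrom_ms):
    """Return how many times faster the PG-Strom run finished."""
    return regular_ms / pgstrom_ms


for label, (regular_ms, pgstrom_ms) in timings_ms.items():
    print(f"{label}: {speedup(regular_ms, pgstrom_ms):.1f}x")
```

Both cases land a little above 10x, so the gain does not seem to depend on whether the table is served from disk or from the buffer.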

Idea

During a sequential scan, PostgreSQL repeats two steps: (1) fetch a tuple from storage (or from the buffer), and (2) evaluate the qualifier of the WHERE clause against the contents of that tuple. It therefore cannot work on (2) while (1) is in progress, nor on (1) while (2) is in progress. One idea is CPU multi-threading, but that is hard to implement because PostgreSQL's design, including its memory and I/O management, is not thread-safe.

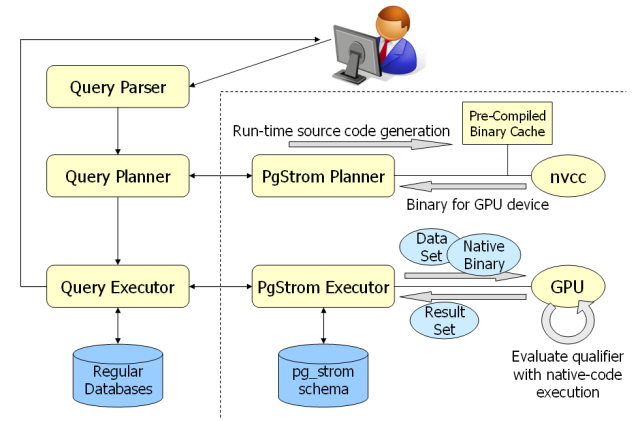
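The serial loop described above can be sketched in a few lines. This is only an illustration of the control flow, not PostgreSQL's executor: `seq_scan`, `circle_qual` and the dictionary rows are hypothetical stand-ins, and the qualifier is the one used in the benchmark.

```python
def seq_scan(table, qual):
    """Serial scan: fetch a tuple, evaluate the qualifier, repeat."""
    matched = []
    for row in table:      # (1) fetch a tuple from storage or buffer
        if qual(row):      # (2) evaluate the WHERE qualifier on that tuple
            matched.append(row)
    return matched


def circle_qual(row):
    """The benchmark's WHERE clause, written as a plain function."""
    x, y = row["xval"], row["yval"]
    return (x - 23.45) * (x - 23.45) + (y - 54.32) * (y - 54.32) < 100


rows = [{"xval": 23.0, "yval": 54.0}, {"xval": 90.0, "yval": 10.0}]
print(len(seq_scan(rows, circle_qual)))  # only the first row is inside the circle
```

Steps (1) and (2) strictly alternate on one CPU here, which is exactly the part PG-Strom tries to split between CPU and GPU.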

PG-Strom hands the (2) portion (evaluation of the WHERE clause) over to the GPU device and lets the CPU focus on I/O.

The calculation is handled asynchronously on the GPU device, so it can finish while the CPU is busy with further I/O.

However, a GPU is not a silver bullet.

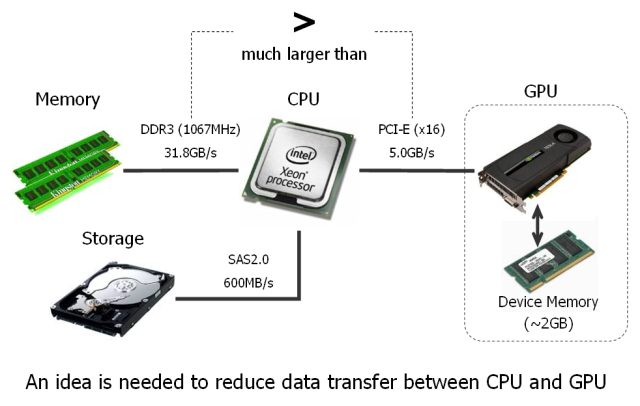

The data to be processed by the GPU has to be transferred into the device memory on the GPU board. That transfer goes over PCI-E, whose bandwidth is narrow compared to the path between CPU and main memory (max 2.5GB/s on an x16 link).

Thus, the amount of data copied should be kept as small as possible.

It is rare for a WHERE clause to reference every column of a table, because the purpose of a query is usually to fetch the records that satisfy some condition on a few columns.

PG-Strom executes the WHERE clause on the GPU device, and only the contents of the referenced columns are copied to it. I expect that 10%-20% of the table size needs to be copied to the GPU device via PCI-E, because numeric data is smaller than text data.
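As a rough check of that estimate, the sketch below computes the share of each row that would have to cross PCI-E for the benchmark query. The per-column byte widths are my assumption (4-byte integers, an 84-byte filler as in a default pgbench_accounts table, 8-byte doubles for xval and yval), and tuple headers and null maps are ignored; `copied_fraction` is a hypothetical helper.

```python
# Assumed per-row byte widths (not measured): int4 = 4, char(84) = 84, float8 = 8
column_bytes = {
    "aid": 4,
    "bid": 4,
    "abalance": 4,
    "filler": 84,
    "xval": 8,
    "yval": 8,
}


def copied_fraction(column_bytes, referenced):
    """Share of the row data that must be copied to the GPU."""
    copied = sum(column_bytes[name] for name in referenced)
    return copied / sum(column_bytes.values())


fraction = copied_fraction(column_bytes, ["xval", "yval"])
print(f"{fraction:.1%} of each row is copied")
```

Under these assumptions only 16 of 112 bytes per row are transferred, which falls inside the 10%-20% range.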

Data structure and Asynchronous process

The internal data structure of PG-Strom is organized according to the above idea.

For example, when we create a foreign table with four columns a, b, c and d, PG-Strom creates one table per column within the pg_strom schema. Each of these tables has a rowid (int64) to identify a particular row and an array-typed column that stores multiple original values.
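The per-column layout can be pictured with a small sketch. This is not PG-Strom's actual catalog code: `to_column_store` is a hypothetical helper, and I assume here that the rowid stored with each array is the id of its first element.

```python
def to_column_store(rows, columns, chunk_rows):
    """Split row-oriented data into per-column lists of (rowid, array) entries."""
    store = {col: [] for col in columns}
    for start in range(0, len(rows), chunk_rows):
        block = rows[start:start + chunk_rows]
        for col in columns:
            # Assumption: rowid is the id of the first value held in the array
            store[col].append((start, [row[col] for row in block]))
    return store


rows = [{"a": i, "b": i * 10, "c": i * 100, "d": str(i)} for i in range(5)]
store = to_column_store(rows, ["a", "b", "c", "d"], chunk_rows=3)
print(store["b"])
```

A query that references only column b then needs to read `store["b"]` and nothing else, which is what keeps the PCI-E transfer small.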

Although this is a column-oriented layout of the kind that has become popular recently, the data stays under PostgreSQL's transaction management, so PG-Strom does not need to handle transactions itself.

This type of data structure allows PG-Strom to load data into GPU devices via the PCI-E bus efficiently.

The contents read from the database are temporarily stored in a fixed-length buffer called a "chunk", which is then moved to the GPU device, processed there, and finally has its results written back. These steps run asynchronously, so the CPU can keep scanning the database to set up the next chunk in the meantime. This design makes it possible to keep both the CPU and the GPU busy.
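The overlap between setting up the next chunk and evaluating the previous one can be sketched as follows. A single worker thread stands in for the GPU device here, so this only shows the shape of the pipeline, not real device code; `CHUNK_ROWS`, `read_chunks`, `evaluate_chunk` and `pipelined_count` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor

CHUNK_ROWS = 4  # hypothetical chunk size, tiny for illustration


def read_chunks(xs, ys, chunk_rows=CHUNK_ROWS):
    """CPU side: cut the referenced columns into fixed-length chunks."""
    for start in range(0, len(xs), chunk_rows):
        yield xs[start:start + chunk_rows], ys[start:start + chunk_rows]


def evaluate_chunk(chunk):
    """Stand-in for the device side: evaluate the qualifier per row."""
    xs, ys = chunk
    return [(x - 23.45) * (x - 23.45) + (y - 54.32) * (y - 54.32) < 100
            for x, y in zip(xs, ys)]


def pipelined_count(xs, ys):
    matched = 0
    with ThreadPoolExecutor(max_workers=1) as device:
        pending = None
        for chunk in read_chunks(xs, ys):       # set up the next chunk
            future = device.submit(evaluate_chunk, chunk)  # hand it off
            if pending is not None:
                matched += sum(pending.result())  # collect the previous one
            pending = future
        if pending is not None:
            matched += sum(pending.result())
    return matched


xs = [23.0] * 5 + [90.0] * 5
ys = [54.0] * 10
print(pipelined_count(xs, ys))
```

While the worker evaluates one chunk, the main loop is already slicing the next one, which mirrors the CPU scanning the database while the GPU computes.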

Just-in-time compile and native-code execution

CPUs and GPUs each have their own advantages and disadvantages. A GPU has much higher computing capability because it runs a large number of calculation units in parallel; however, one of its weaknesses is conditional branching.

NVidia's GPUs run threads in lockstep in groups of 32 (called a warp) on each SM (Streaming Multiprocessor), much like SIMD operations. When device code contains a conditional branch and the condition is true for some threads of the group and false for others, the whole group steps through both the true-block and the false-block, and each thread discards the result of the block it should have skipped. Thus, the cost of branch statements on a GPU device cannot be ignored, especially when the if-block is big.
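A toy cost model makes the effect of a divergent branch visible. It assumes a group of 32 lockstep threads (a warp in CUDA terminology) and made-up cycle counts for the two blocks; `warp_cost` is a hypothetical helper, not a CUDA API.

```python
WARP_SIZE = 32  # threads that execute in lockstep


def warp_cost(conditions, true_cost, false_cost):
    """Cycles one lockstep group spends on an if/else.

    conditions holds one boolean per thread; the costs are illustrative.
    """
    cost = 0
    if any(conditions):
        cost += true_cost    # at least one thread needs the true-block
    if not all(conditions):
        cost += false_cost   # at least one thread needs the false-block
    return cost


uniform = [True] * WARP_SIZE
diverged = [True] + [False] * (WARP_SIZE - 1)

print(warp_cost(uniform, true_cost=100, false_cost=5))
print(warp_cost(diverged, true_cost=100, false_cost=5))
```

A single thread that takes the expensive block is enough to make the whole group pay for both blocks, which is why a large if-block hurts so much.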

PostgreSQL represents the WHERE clause internally as a tree structure, and evaluates it by walking the tree with a switch statement at each node. On a GPU this would be about the least efficient approach possible.

Thus, PG-Strom adopts just-in-time compilation to generate native GPU binary code and so avoid this kind of execution control on the GPU device.

When a query references a foreign table managed by PG-Strom, the query planner asks PG-Strom to generate an execution plan. At that time, PG-Strom dynamically generates source code for the GPU device, then invokes nvcc (NVidia's compiler for the device) to build native code for the GPU device.

Of course, the result is cached in shared memory to avoid running the compiler too frequently.

Next, when the query executor calls PG-Strom's executor, this native code is transferred to the device together with the data read from the pg_strom schema, as mentioned above, and executed asynchronously.

The qualifiers of the WHERE clause were already extracted at the planner stage, so there is no need to handle a big switch statement.
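The difference between walking the tree per row and generating code once can be sketched like this. The tuple-based tree, `interpret`, `to_source` and `jit_compile` are hypothetical, and Python's built-in `compile()` merely stands in for the nvcc step; PG-Strom's real generator emits CUDA source.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "<": operator.lt}


def interpret(node, row):
    """Tree walk: one dispatch per node, repeated for every row."""
    kind = node[0]
    if kind == "col":
        return row[node[1]]
    if kind == "const":
        return node[1]
    return OPS[kind](interpret(node[1], row), interpret(node[2], row))


def to_source(node):
    """Code generation: flatten the tree into one expression string."""
    kind = node[0]
    if kind == "col":
        return "cv_" + node[1]
    if kind == "const":
        return repr(node[1])
    return "(" + to_source(node[1]) + " " + kind + " " + to_source(node[2]) + ")"


def jit_compile(node):
    """Compile the generated source once; the result has no per-node dispatch."""
    code = compile(to_source(node), "<qual>", "eval")
    return lambda row: eval(code, {"__builtins__": {}},
                            {"cv_" + name: value for name, value in row.items()})


dx = ("-", ("col", "xval"), ("const", 23.45))
dy = ("-", ("col", "yval"), ("const", 54.32))
qual = ("<", ("+", ("*", dx, dx), ("*", dy, dy)), ("const", 100))

print(to_source(qual))
compiled = jit_compile(qual)
print(compiled({"xval": 23.0, "yval": 54.0}))
```

The generated string has the same shape as the condition inside the device code PG-Strom produces, and once compiled it is evaluated without any switch-style dispatch.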

We can inspect the automatically generated GPU device code with EXPLAIN.

    mytest=# EXPLAIN SELECT * FROM pgstrom_accounts
               WHERE (xval - 23.45) * (xval - 23.45) +
                     (yval - 54.32) * (yval - 54.32) < 100;
                              QUERY PLAN
    --------------------------------------------------------------
     Foreign Scan on pgstrom_accounts (cost=2.00..0.00 rows=1000 width=368)
       Required Cols : aid, bid, abalance, filler, xval, yval
       Used in clause : xval, yval
        1: typedef unsigned long size_t;
        2: typedef long __clock_t;
        3: typedef __clock_t clock_t;
        4: #include "crt/device_runtime.h"
        5:
        6: typedef char bool_t;
        7:
        8: __global__ void
        9: pgstrom_qual(unsigned char rowmap[],
       10:              double c5_values[],
       11:              unsigned char c5_nulls[],
       12:              double c6_values[],
       13:              unsigned char c6_nulls[])
       14: {
       15:     int offset_base = blockIdx.x * blockDim.x + threadIdx.x;
       16:     int offset = offset_base * 8;
       17:     unsigned char result = rowmap[offset_base];
       18:     unsigned char errors = 0;
       19:     unsigned char cn5 = c5_nulls[offset_base];
       20:     unsigned char cn6 = c6_nulls[offset_base];
       21:     int bitmask;
       22:
       23:     for (bitmask=1; bitmask < 256; bitmask <<= 1)
       24:     {
       25:         double cv5 = c5_values[offset];
       26:         double cv6 = c6_values[offset];
       27:
       28:         if ((result & bitmask) &&
                       !((((cv5 - 23.45) * (cv5 - 23.45)) +
                          ((cv6 - 54.32) * (cv6 - 54.32))) < 100))
       29:             result &= ~bitmask;
       30:         offset++;
       31:     }
       32:     rowmap[offset_base] = (result & ~errors);
       33: }
    (36 rows)
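To make the generated kernel easier to follow, here is a host-side emulation of what a single GPU thread does, as I read the code above: each thread owns one byte of rowmap, which covers eight rows, and clears the bit of every row that fails the condition. The function name `pgstrom_qual_thread` is hypothetical, and the null maps and the errors byte are left out because the kernel shown reads them but never changes the result with them.

```python
def pgstrom_qual_thread(offset_base, rowmap, c5_values, c6_values):
    """Emulate one thread of the generated kernel on plain Python sequences."""
    offset = offset_base * 8          # first of the eight rows owned by this thread
    result = rowmap[offset_base]      # one visibility bit per row
    bitmask = 1
    while bitmask < 256:
        cv5 = c5_values[offset]
        cv6 = c6_values[offset]
        passed = (cv5 - 23.45) * (cv5 - 23.45) + (cv6 - 54.32) * (cv6 - 54.32) < 100
        if (result & bitmask) and not passed:
            result &= ~bitmask        # drop the row that failed the qualifier
        offset += 1
        bitmask <<= 1
    rowmap[offset_base] = result & 0xFF


rowmap = bytearray([0b11111111])
xvals = [23.0, 90.0, 23.0, 90.0, 23.0, 90.0, 23.0, 90.0]
yvals = [54.0] * 8
pgstrom_qual_thread(0, rowmap, xvals, yvals)
print(format(rowmap[0], "08b"))
```

Rows whose bit was already cleared on input stay cleared, so the rowmap can carry earlier filtering decisions into the kernel.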

Publication

Right now, it is public on GitHub under the GPLv3.

https://github.com/kaigai/pg_strom

It is still a prototype, so its specification depends on my mood and documentation should not be expected for a while. If you'd like to use it, please ping me (@kkaigai) on Twitter.

A short demonstration

This is a short demonstration. The 't1' table is a regular table with 5 million records, and the 't2' table is a foreign table managed by PG-Strom, also with 5 million records.

For a sequential scan with a complex qualifier, the scan on 't2' finished about 10 times faster than the scan on 't1'.