Posters flying on GTC2017

I'm at GTC (GPU Technology Conference) again this year.

I had not planned to travel because of the travel budget, so I only submitted posters, and they were accepted.

(Posters are shown regardless of the attendance of the presenter.)

But then I got the e-mail below...

Hi Kohei,

After extensive review from multiple committees and nearly 140 posters, your poster has been chosen as a Top 5 Finalist. Congratulations!!

So I arranged a trip in a hurry, and now I'm in San Jose.

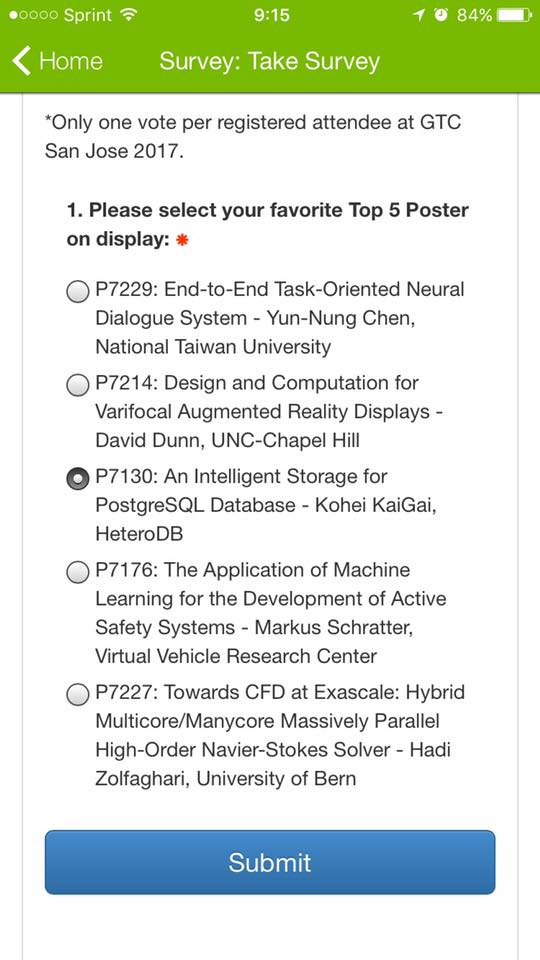

The top-5 posters are displayed in the most conspicuous place in the convention center.

Participants can vote for their favorite poster, and the best poster of the year is chosen through this democratic process. :-)

The other top-20 presenters and I each gave a short talk on the evening of the first day of the conference.

Each presenter is given four minutes to introduce their research in this short time slot.

(It is shorter than usual lightning talks!)

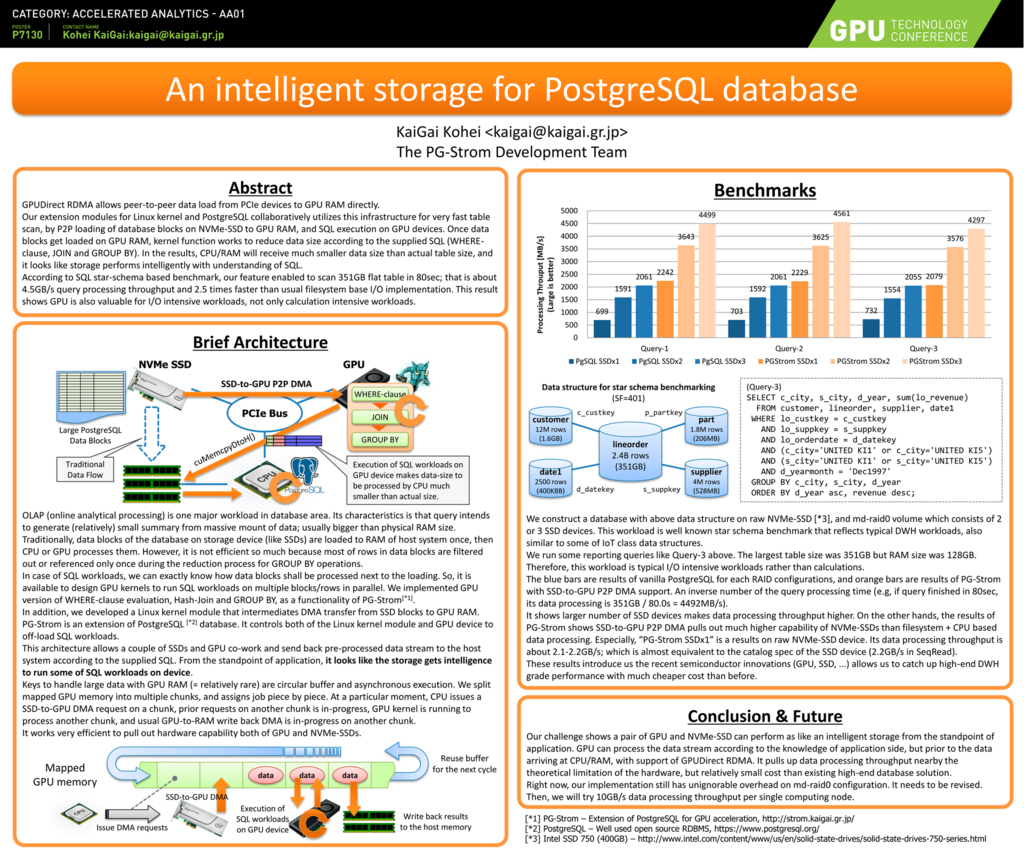

Our challenge is to re-define the role of the GPU. People usually consider the GPU a powerful tool for tackling compute-intensive workloads. That is right, and a wide range of workloads, such as deep learning and simulations, make good use of GPUs.

On the other hand, I/O is a major workload for databases, including data analytics that try to handle massive amounts of data.

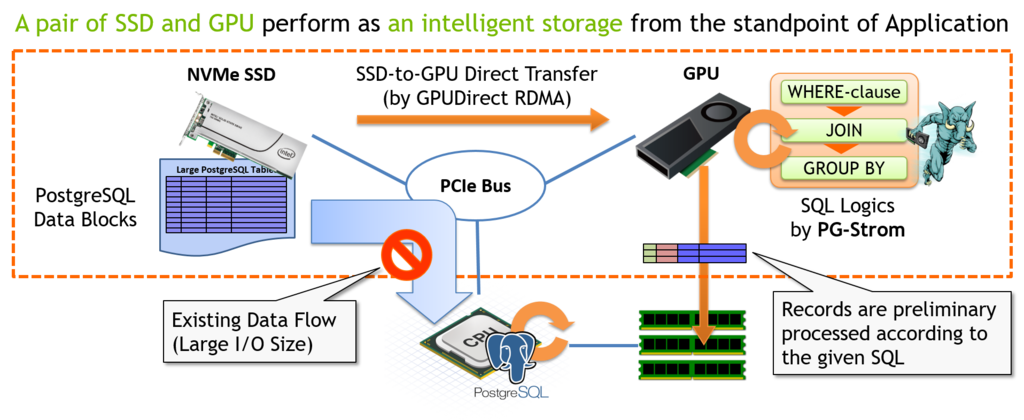

We designed and implemented a Linux kernel module that mediates peer-to-peer data transfer between NVMe-SSDs and the GPU, on top of the GPUDirect RDMA feature. It allows PostgreSQL's data blocks on NVMe-SSDs to be loaded directly into the GPU's RAM.

We already have a SQL execution engine on GPU devices, so once the data blocks are loaded into the GPU's RAM, they can be processed right there.

This means we can process the earlier stages of SQL execution before the data is loaded into the system's main memory. These earlier stages include the WHERE clause, JOIN and GROUP BY, which usually reduce the amount of data.

For example, when a WHERE clause filters out 90% of the rows (not a strange assumption for reporting queries) during a scan of 100GB of tables, we can say that 90GB worth of I/O bandwidth, and of the disk cache that gets wiped out, is consumed by junk data.
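The arithmetic behind this example can be written out as a small Python sketch. The function name `wasted_io_gb` and its parameters are made up for illustration; only the 100GB table size and the 90% filter ratio come from the example above, and the sketch assumes rows are of roughly uniform size so that the row ratio maps directly onto bytes.

```python
def wasted_io_gb(table_size_gb: float, filtered_ratio: float) -> float:
    """Return how many GB are read from storage only to be discarded by the WHERE clause."""
    if not 0.0 <= filtered_ratio <= 1.0:
        raise ValueError("filtered_ratio must be between 0 and 1")
    return table_size_gb * filtered_ratio


table_size_gb = 100.0   # size of the scanned tables, from the example above
filtered_ratio = 0.9    # fraction of rows removed by the WHERE clause

junk_gb = wasted_io_gb(table_size_gb, filtered_ratio)
useful_gb = table_size_gb - junk_gb
print(f"junk: {junk_gb:.0f}GB, useful: {useful_gb:.0f}GB")
```

Only the "useful" part ever needs to reach the host; the rest is traffic we would like to stop before it gets there.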

Once data blocks are loaded onto the GPU, it can filter out unnecessary rows (and process JOIN and GROUP BY), then write the results of this pre-processing back to host memory. The data written back contains only valid data because the unnecessary data has already been dropped.
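As a rough illustration of this data flow, the following Python sketch mimics it on the CPU. It is neither GPU code nor our actual implementation; the names `preprocess_on_device` and `blocks`, and the toy row layout, are hypothetical and exist only to show where the filtering happens relative to the write-back.

```python
from typing import Callable, Dict, Iterable, List

Row = Dict[str, int]


def preprocess_on_device(blocks: Iterable[List[Row]],
                         predicate: Callable[[Row], bool]) -> List[Row]:
    """Stand-in for the GPU-side step: scan each loaded block, keep only matching rows."""
    survivors: List[Row] = []
    for block in blocks:            # each list plays the role of one data block read from SSD
        for row in block:
            if predicate(row):      # WHERE-clause evaluation
                survivors.append(row)
    return survivors                # only this result would be written back to host memory


# Two toy "data blocks" of 10 rows each, with ids 0..19.
blocks = [[{"id": i, "value": i * 10} for i in range(start, start + 10)]
          for start in (0, 10)]

# WHERE value >= 180 keeps 2 of the 20 rows, so 90% of the rows are filtered out.
result = preprocess_on_device(blocks, lambda row: row["value"] >= 180)
print(len(result), "of", sum(len(b) for b in blocks), "rows reach host memory")
```

In the real system the loop body would run on the GPU over PostgreSQL data blocks, but the shape is the same: blocks go in, and only the surviving rows come out to the host.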

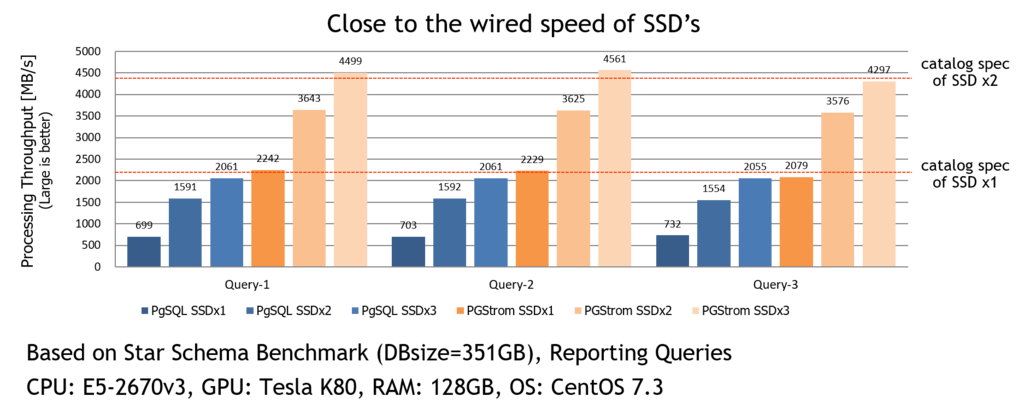

As a result, it performs at nearly the wire speed of the NVMe-SSD.

In the single-SSD benchmark, query execution throughput with vanilla PostgreSQL is around 700MB/s. On the other hand, SSD-to-GPU Direct SQL execution runs the same query at 2.2GB/s, which is almost identical to the catalog spec of the Intel SSD 750 (SeqRead: 2200MB/s).
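To put these figures side by side, here is a small Python calculation. The throughput values are the ones quoted above; the 100GB scan size is borrowed from the earlier WHERE-clause example purely as an illustrative assumption (it is not a measured benchmark), and 1GB is taken as 1000MB.

```python
VANILLA_MB_S = 700.0      # vanilla PostgreSQL, approximate figure quoted above
SSD2GPU_MB_S = 2200.0     # SSD-to-GPU Direct SQL execution (2.2GB/s)
CATALOG_MB_S = 2200.0     # Intel SSD 750 catalog spec, sequential read


def scan_seconds(size_gb: float, throughput_mb_s: float) -> float:
    """Time to scan size_gb at the given throughput, taking 1GB = 1000MB."""
    return size_gb * 1000.0 / throughput_mb_s


speedup = SSD2GPU_MB_S / VANILLA_MB_S
utilization = SSD2GPU_MB_S / CATALOG_MB_S

scan_gb = 100.0  # assumed scan size for illustration only
print(f"speedup over vanilla: {speedup:.1f}x")
print(f"share of catalog bandwidth: {utilization:.0%}")
print(f"vanilla scan: {scan_seconds(scan_gb, VANILLA_MB_S):.0f}s")
print(f"SSD-to-GPU scan: {scan_seconds(scan_gb, SSD2GPU_MB_S):.0f}s")
```

In other words, the same query runs roughly three times faster, and the remaining limit is the SSD itself rather than the database engine.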

With multiple SSDs, performance also increases; however, deeper investigation *1 and improved md0-raid support are needed.

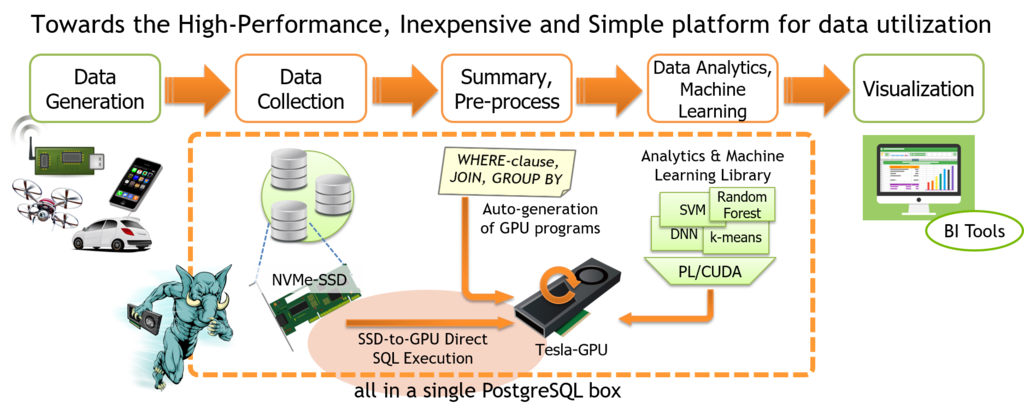

We intend to position this technology for summarizing and pre-processing raw data/logs generated by sensor devices, mobile devices and so on. Once the logs are summarized, we can apply statistical-analytics / machine-learning algorithms.

This type of workload benefits from PL/CUDA, which is another feature of ours for utilizing GPUs for in-database analytics.

Eventually, GPU-accelerated PostgreSQL will be capable of handling the entire life-cycle of data utilization (generation - gathering - summarizing/pre-processing - analytics/machine-learning - visualization).

This makes system configuration much simpler, reduces the cost of production and operation, and delivers the power of analytics to more and more people.

My poster is below (click to enlarge):

If you like what we are developing, don't forget to vote.

Enjoy your GTC2017!

(My wife's comment on the photo above: it looks like a zombie.)

*1: At that time, we measured with a Tesla K80, which is a bit old.