I have recently been working on an FDW for Apache Arrow files, including SSD-to-GPU Direct SQL support in PG-Strom. Apache Arrow is a column-oriented data format designed for application-independent data exchange, and it is supported by quite a few "big-data" software products.

The latest revision of PG-Strom can use Apache Arrow files as a data source for query execution through the Arrow_Fdw feature. This article gives a brief overview of Arrow_Fdw, its benchmark results, and our future plans.

What is Arrow_Fdw

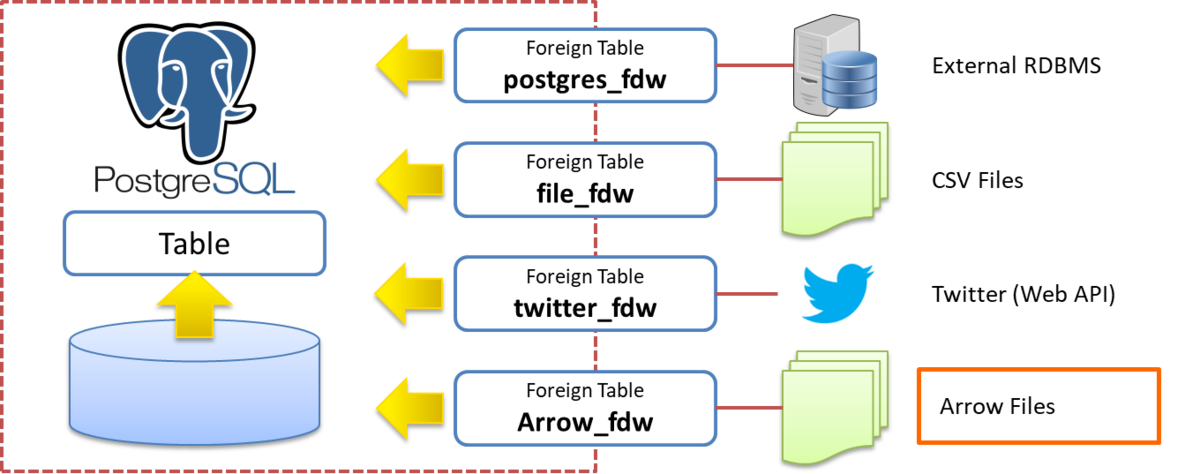

PostgreSQL supports the FDW (Foreign Data Wrapper) mechanism, which makes it possible to read various external data sources as if they were normal tables. Some FDWs also support writing.

Of course, an external data source has a different data structure from PostgreSQL's internal representation of rows and columns. For example, a CSV file represents structured data as comma-separated text. PostgreSQL cannot understand this format without proper conversion, so the file_fdw extension mediates between the world of PostgreSQL and the world of CSV files.

This mechanism can be applied to various kinds of data sources. For example, postgres_fdw uses a remote PostgreSQL server as its data source. A unique one is twitter_fdw, which fetches tweets from the Twitter timeline via a Web API.

Arrow_Fdw maps Apache Arrow files so that they can be referenced from SQL.

Below is an example that maps an Arrow file (/opt/nvme/t.arrow) using Arrow_Fdw.

    postgres=# IMPORT FOREIGN SCHEMA hoge
                 FROM SERVER arrow_fdw
                 INTO public OPTIONS (file '/opt/nvme/t.arrow');
    IMPORT FOREIGN SCHEMA
    postgres=# \d hoge
                      Foreign table "public.hoge"
     Column |  Type   | Collation | Nullable | Default | FDW options
    --------+---------+-----------+----------+---------+-------------
     id     | integer |           |          |         |
     aid    | integer |           |          |         |
     bid    | integer |           |          |         |
     ymd    | date    |           |          |         |
     cat    | text    |           |          |         |
     md5    | text    |           |          |         |
    Server: arrow_fdw
    FDW options: (file '/opt/nvme/t.arrow')

Of course, you can use the CREATE FOREIGN TABLE command as usual. However, an Arrow file contains its own schema definition, and the column list must match it exactly. We recommend IMPORT FOREIGN SCHEMA because it generates the foreign table definition automatically.

Overview of SSD-to-GPU Direct SQL on Arrow_Fdw

PG-Strom has a special storage optimization mode called SSD-to-GPU Direct SQL. It uses P2P DMA over the PCIe bus, built on the GPUDirect RDMA kernel APIs provided by NVIDIA.

Usually, PostgreSQL loads a table's contents from storage into RAM and then processes the loaded data. In typical analytic workloads, a considerable portion of the loaded data is filtered out. In other words, we consume narrow storage bandwidth to carry junk data, which wastes I/O resources.

When PG-Strom uses SSD-to-GPU Direct SQL, it loads the table's contents directly into the GPU's device memory, and the GPU then runs the SQL workload in parallel on its thousands of cores. This usually makes the amount of data loaded onto the host system much smaller than the original source.

Even when the data source is an Arrow file, the overall mechanism is the same. PG-Strom loads only the referenced column data using P2P DMA, and then the GPU processes the SQL workload (WHERE clause, JOIN, GROUP BY). Unlike with the row data of PostgreSQL tables, unreferenced columns are not loaded.

Benchmark

We ran the Star Schema Benchmark (SSBM) with scale-factor=401, as usual. The database size is 353GB in total. We exported its lineorder table into an Arrow file on an NVME-SSD volume.

| model | Supermicro SYS-1019GP-TT |
|---|---|
| CPU | Intel Xeon Gold 6126T (2.6GHz, 12C) x1 |
| RAM | 192GB (32GB DDR4-2666 x6) |
| GPU | NVIDIA Tesla V100 (5120C, 16GB) x1 |
| SSD | Intel SSD DC P4600 (HHHL; 2.0TB) x3 (striped by md-raid0) |
| HDD | 2.0TB (SATA; 7.2krpm) x6 |
| network | 10Gb ethernet x2 ports |
| OS | Red Hat Enterprise Linux 7.6; CUDA 10.1 + NVIDIA Driver 418.40.04 |
| DB | PostgreSQL v11.2; PG-Strom v2.2devel |

The benchmark results are below:

Because of the column-oriented data structure, performance varies considerably depending on the number of referenced columns.

Queries run much faster than in the previous row-data based benchmark, which showed 7.2-7.9GB/s with PG-Strom and 2.2-2.3GB/s with PostgreSQL parallel scan. Query execution throughput is inversely proportional to query response time. So 49GB/s means that summarizing the sequential-scan results of a 300GB+ table finishes in about 6 seconds, and 25GB/s means that a similar workload finishes in about 12 seconds. Once this grade of batch processing performance is achievable on a single node, it changes some of the assumptions we make when designing system configurations.
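The conversion between throughput and elapsed time in the paragraph above can be checked with a few lines of Python. This is only a minimal sketch: the 300GB table size is an assumed round figure for the "300GB+" table, and `scan_seconds` is a hypothetical helper name, not part of PG-Strom.

```python
def scan_seconds(table_gb: float, throughput_gb_per_s: float) -> float:
    """Return the time needed to scan table_gb at the given throughput."""
    if throughput_gb_per_s <= 0:
        raise ValueError("throughput must be positive")
    return table_gb / throughput_gb_per_s


# Assumption: 300GB stands in for the "300GB+" table mentioned in the text.
TABLE_GB = 300.0

fast = scan_seconds(TABLE_GB, 49.0)  # roughly 6 seconds
slow = scan_seconds(TABLE_GB, 25.0)  # 12 seconds
print(f"{fast:.1f}s at 49GB/s, {slow:.1f}s at 25GB/s")
```

Doubling the throughput halves the response time, which is why the two figures differ by a factor of about two.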

Validation of the benchmark results

To confirm, we would like to validate whether the benchmark results are reasonable. Below is the definition of the flineorder foreign table, which maps an Arrow file using Arrow_Fdw.

The benchmark results above say that Q2_1 runs at 28.7GB/s. Q2_1 references lo_suppkey, lo_orderdate, lo_orderpriority and lo_supplycost.

                   Foreign table "public.flineorder"
           Column       |     Type      | Size
    --------------------+---------------+--------------------------------
     lo_orderkey        | numeric       | 35.86GB
     lo_linenumber      | integer       | 8.96GB
     lo_custkey         | numeric       | 35.86GB
     lo_partkey         | integer       | 8.96GB
     lo_suppkey         | numeric       | 35.86GB  <-- ★Referenced by Q2_1
     lo_orderdate       | integer       | 8.96GB   <-- ★Referenced by Q2_1
     lo_orderpriority   | character(15) | 33.61GB  <-- ★Referenced by Q2_1
     lo_shippriority    | character(1)  | 2.23GB
     lo_quantity        | integer       | 8.96GB
     lo_extendedprice   | bigint        | 17.93GB
     lo_ordertotalprice | bigint        | 17.93GB
     lo_discount        | integer       | 8.96GB
     lo_revenue         | bigint        | 17.93GB
     lo_supplycost      | bigint        | 17.93GB  <-- ★Referenced by Q2_1
     lo_tax             | integer       | 8.96GB
     lo_commit_date     | character(8)  | 17.93GB
     lo_shipmode        | character(10) | 22.41GB
    FDW options: (file '/opt/nvme/lineorder_s401.arrow')  ... file size = 310GB

The total size of the referenced columns is 96.4GB out of 310GB (31.1%). So the raw storage read throughput is 96.4GB / 11.06s = 8.7GB/s, where 11.06s is the query response time. This is reasonable performance for 3x Intel DC P4600 NVME-SSDs.
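The arithmetic behind this validation can be reproduced as a short Python sketch. The column sizes, file size, and 11.06s figure are copied from the text above; the variable names are illustrative only.

```python
# Sizes (GB) of the columns referenced by Q2_1, copied from the listing above.
REFERENCED_GB = {
    "lo_suppkey": 35.86,
    "lo_orderdate": 8.96,
    "lo_orderpriority": 33.61,
    "lo_supplycost": 17.93,
}
FILE_GB = 310.0       # size of the Arrow file, from the listing
RESPONSE_SEC = 11.06  # elapsed time used in the text's calculation

referenced_gb = sum(REFERENCED_GB.values())
fraction = referenced_gb / FILE_GB
raw_gb_per_s = referenced_gb / RESPONSE_SEC

print(f"referenced columns: {referenced_gb:.1f}GB ({fraction:.1%} of the file)")
print(f"raw storage read throughput: {raw_gb_per_s:.1f}GB/s")
```

The result stays within what three striped NVME-SSDs can plausibly deliver, which is the point of the check.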

Future Plan: Towards 100GB/s

The current status is "just workable", so further work is needed to raise the software quality to production grade.

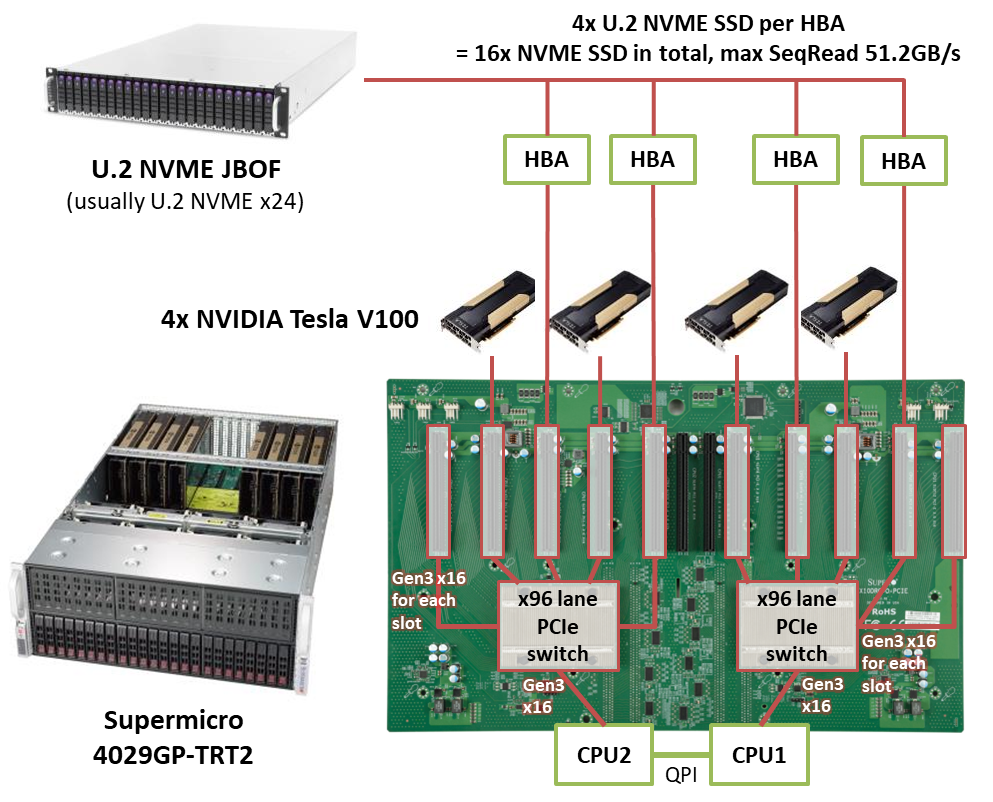

To improve performance further, we would like to run benchmarks on a multi-GPU server like this one.

This configuration uses the Supermicro SYS-4029GP-TRT2, which is designed for HPC and optimized for RDMA with PCIe switches. It can hold up to 4x Tesla GPUs and 4x HBA cards for attaching external NVME-SSD enclosures. Each HBA card can connect 4x NVME-SSDs and read from storage at up to 12.8GB/s.

So we can configure 4 units of 1xGPU + 4xSSD (12.8GB/s each), which is 4xGPU + 16xSSD (51.2GB/s) in total.

If we assume that summarizing and analytic queries read 1/3 of the columns on average, the upper limit of query execution throughput is 51.2GB/s / (1/3) ≈ 150GB/s from the standpoint of raw storage performance.
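As a rough check of this estimate, the sketch below recomputes the aggregate storage bandwidth and the resulting upper bound. The 1/3 column fraction is the assumption stated in the text, and the constant names are illustrative.

```python
UNITS = 4                # units of 1xGPU + 4xSSD, from the text
HBA_GB_PER_S = 12.8      # read bandwidth per HBA card, from the text
COLUMN_FRACTION = 1 / 3  # assumed share of columns an analytic query reads

storage_gb_per_s = UNITS * HBA_GB_PER_S
upper_bound_gb_per_s = storage_gb_per_s / COLUMN_FRACTION

print(f"aggregate storage bandwidth: {storage_gb_per_s:.1f}GB/s")
print(f"upper bound on query throughput: {upper_bound_gb_per_s:.1f}GB/s")
```

The bound scales inversely with the column fraction, so queries that touch more columns would see a proportionally lower ceiling.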

100GB/s is probably a feasible milestone for PostgreSQL, and we would like to run the benchmark in 2019.